ChatGPT and AI snapshot

Photo: The Alchemist, David Rijckaert III Antwerp, 1661

Starwatcher is out there, always observing the startup universe and taking notes. This is an AI snapshot.

The tsunami of "GPT-Chat" couldn't be missed. It swept through the internet and it will probably change the way we consume information forever, just like Google did back in 1998 when we switched from browsing information catalogues to "smart search".

This is a great opportunity to make an AI snapshot that can be reviewed in the future.

What is ChatGPT?

In short, it is a large statistical language model. To understand this better, let's look at it in context and compare it to another well-known information tool: Google.

Consider the internet as a big (very big) pile of information, and let's see how this information is viewed and represented by ChatGPT and by Google.

Google's paradigm has been to gather, index, and sort this information by importance (and to sell ads, literally). The sorting is done using the PageRank algorithm, which estimates the importance of a particular source of information from the number, and the importance, of the other sites that link to it. This was a major breakthrough in 1998, when other search engines simply provided lists of links. It changed the paradigm, and minds were blown at the time. Over time, Google has added new data dimensions to improve its search results, but the core PageRank algorithm remains an important signal for evaluating the quality of a source.
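To make the link-counting idea concrete, here is a minimal sketch of PageRank-style scoring on a made-up four-page web. The page names, the `pagerank` helper, the damping value of 0.85, and the fixed number of iterations are all assumptions chosen for illustration; this is not how Google's production ranking is implemented.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Score pages by repeatedly passing each page's score along its outgoing links."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline score (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three of the four pages link to "home".
toy_web = {
    "home": ["blog"],
    "blog": ["home"],
    "shop": ["home"],
    "about": ["home", "blog"],
}
scores = pagerank(toy_web)
best = max(scores, key=scores.get)
print(best, scores)
```

In this toy web, "home" ends up with the highest score because the most pages point to it, and "blog" comes close behind because the highly ranked "home" links to it.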

On the other hand, OpenAI's ChatGPT (as well as GPT-3 and other similar applications) is a statistical language model. Instead of understanding the sources of information, it tries to understand the information itself. This allows it to generate text that is similar to human writing and can be used for a variety of natural language processing tasks.

How does a large statistical language model actually work? The details are beyond the scope of this article, but here is a general concept that is pretty simple. The task of the model is to figure out what the next word in a sentence will be. For example, if the sentence starts with the word "My," possible next words might be "day," "work," or "significant other" (ok, these are already two words). Now, if the sentence is "My work," the model might predict that the next word will be "is."

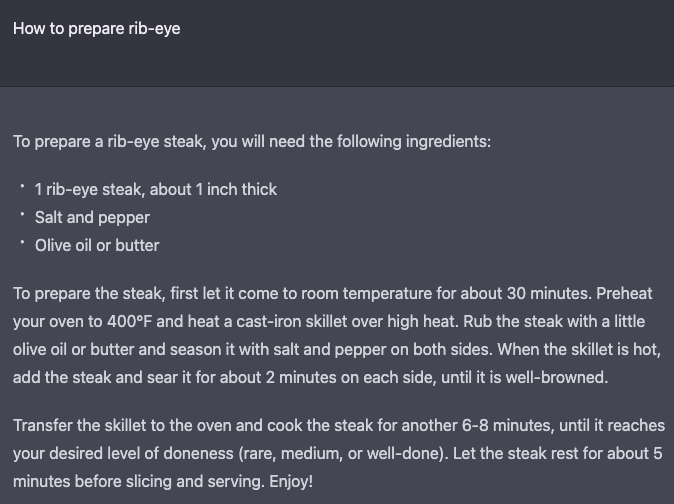
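The next-word idea can be sketched with a tiny word-pair ("bigram") counter. This is a deliberately simplified stand-in: models like GPT-3 use neural networks and far more context than one preceding word. The training text and the function names below are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def next_word_probabilities(following, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = following.get(word.lower(), Counter())
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


# Hypothetical training text; a real model sees vastly more.
corpus = "my work is fun . my day is long . my work is hard . my day was good"
model = train_bigrams(corpus)

after_my = next_word_probabilities(model, "my")
after_work = next_word_probabilities(model, "work")
print(after_my)
print(after_work)
```

After "my", the toy model considers "work" and "day" equally likely; after "work", the only word it has ever seen is "is".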

You might think that this sounds too simple to work. It is, if you have a small dataset. But if you happen to have a 45-terabyte dataset, the model can learn the patterns and nuances of natural language. This allows it to "understand" the context and sentiment of the text, and even the style of writing. For example, if we are interested in steak recipes, it will know that words like "ribeye steak," "cast iron," and "seasoning" are often used together. Based on this knowledge, the model can generate text summaries, make translations, and answer questions. Or, in our case, come up with a steak recipe.
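The "words that are often used together" signal can be illustrated by counting which word pairs share a sentence. This is only a rough sketch of the statistical intuition, not the mechanism a large language model actually uses; the four sentences and the `cooccurrence_counts` helper are invented for the example.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(sentences):
    """Count how often each pair of words appears in the same sentence."""
    pairs = Counter()
    for sentence in sentences:
        # Sort the unique words so each pair is always stored in the same order.
        unique_words = sorted(set(sentence.lower().split()))
        for pair in combinations(unique_words, 2):
            pairs[pair] += 1
    return pairs


# Hypothetical mini-corpus of cooking sentences.
recipes = [
    "sear ribeye in cast iron",
    "season ribeye with salt",
    "heat cast iron until smoking",
    "bake bread with salt",
]
counts = cooccurrence_counts(recipes)
print(counts[("cast", "iron")])
print(counts[("iron", "ribeye")])
print(counts[("bread", "ribeye")])
```

Even in four sentences, "cast" and "iron" appear together more often than "iron" and "ribeye", while "bread" and "ribeye" never meet. Scale the same kind of signal up to terabytes of text and the associations become much richer.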

ChatGPT is a conversational interface to this model. You can ask a question or query, and it will give you an answer. You can even ask follow-up questions related to the initial question, just like you would if you were talking with a human expert.

Google has a catalog of information and an algorithm to direct you to the most (hopefully) relevant sources, so you can find the answer to your question. ChatGPT, on the other hand, is an interface that will give you the right (fingers crossed) answer to your question. Of course, there are some drawbacks to this approach.

What is it not?

Is it magical AI? Sorry, kid! It is a statistical language model: a very big one, but still a statistical representation of a language. Algorithms allow the model to work out which words are likely to occur together in a sentence. In many cases this produces very good results, but the model does not have an understanding of meaning or any idea of itself. It cannot make predictions about the real world or point you to a source of information, because it has no understanding of the real world. It knows that these words go together because "I have this algorithm which has analysed this big chunk of information".

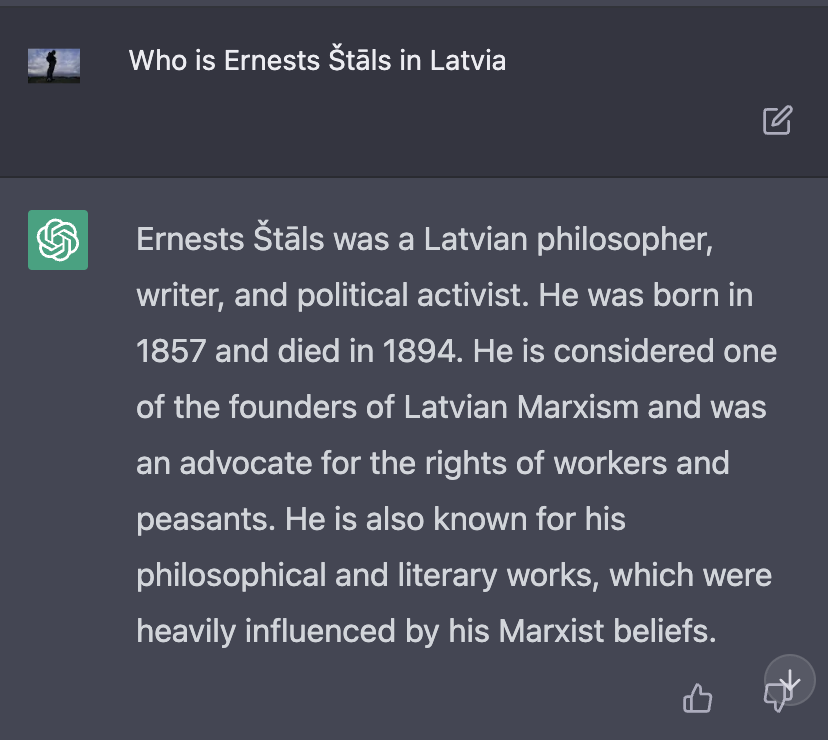

There are times when the input data is limited, and the algorithm will start to improvise and give confident answers that are nicely formatted but don't make sense and don't contain any valuable information. For example, it might generate a sentence about "Ernest, a philosopher and Marxist from the 19th century." However, there never was such a person. In this case, the model has produced a result that is well formed and plausible, but it does not correspond to reality. This is because it has picked up on certain statistical signals that led it to create this sequence of words.

The model will have "wishful thinking" at times, where the algorithm fills in gaps with information that "makes sense" based on the context but doesn't actually exist in reality. For example, I encountered a situation where it generated a piece of code that used several libraries, and in this code there was a line where a certain object needed to be converted to a string. The line looked like this: text_str = object.to_text(). However, there is no to_text() function for this object. When told so, the model corrected itself and said that the correct function is object.to_string(), but this was also not the case. In this context, the algorithm has figured out that it "makes sense" to have such a function, but it doesn't actually exist.
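One practical defence against such invented functions is to check whether a method really exists before relying on it. The sketch below assumes Python; the `Report` class is a hypothetical stand-in for the library object in the story, and `to_text_safely` is a made-up helper name.

```python
class Report:
    """Hypothetical stand-in for a library object; it only defines __str__."""

    def __init__(self, title):
        self.title = title

    def __str__(self):
        return f"Report: {self.title}"


def to_text_safely(obj, suggested_methods=("to_text", "to_string")):
    """Call the first suggested method that really exists, else fall back to str()."""
    for name in suggested_methods:
        method = getattr(obj, name, None)
        if callable(method):
            return method()
    return str(obj)


report = Report("Q4")

# Neither of the method names suggested by the model exists on this object.
missing = [name for name in ("to_text", "to_string") if not hasattr(report, name)]
text_str = to_text_safely(report)
print(missing)
print(text_str)
```

Running generated code, or checking the library's documentation, exposes this kind of mistake quickly. The model's confident tone does not.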

The model will have an exact answer to the question you ask, but it won't correct you if your question is not heading in the right direction. It will answer with confidence, and you might get the impression that you are on the right track. In real life, if you were to ask an industry professional, they might tell you, politely but clearly, that you are looking in the wrong direction and should explore a different approach. With ChatGPT, you have to explicitly ask a more general question to get a broader perspective on the topic.

A good friend of Starwatcher, who has researched generative models and language models, put it nicely: these models are like aliens. We have to learn to understand how they see the world and how to communicate with them.

What are the implications?

This is going to be a very big deal. As Sam Altman (CEO of OpenAI) said, these kinds of solutions can pose a serious challenge to Google for the first time.

Every company working in knowledge management is in jeopardy, or there are new opportunities for major breakthroughs, depending on how you look at it.

Any information source containing static information that stays relevant for a long time will become part of such models, including Wikipedia, food blogs, travel blogs, tutorials, and translation services. All of these services are now at risk of becoming obsolete, because the general knowledge they provide will be available through a general language model. If you are a food blogger, for example, you will need to bring something new to the table to stand out (check out that boring steak recipe above). ChatGPT could give you some new insights about flavors, for example.

This is the key point: by figuring out how these models work and how to formulate questions, anyone can learn a lot and do it quickly. It's like having a personal teacher or mentor who doesn't get tired of your "stupid" questions.

ChatGPT and GPT-3 are general models, but the next wave will probably be niche-specific models that understand the specific context, entities, and how they work together in a particular industry.

For example, the legal industry has been a pioneer in the chatbot industry. In the future, there could be a chatbot for every legal jurisdiction that generates agreements with the clauses required in that particular jurisdiction, or that provides an analysis of a document and its validity under a specific set of laws.

Language models are a subset of the generative algorithm family. We have already seen generative algorithms for images, but there are also algorithms for other industries. In the case of language and image models, the algorithms are trained on a large dataset of information. In other industries, developers provide the constraints and the algorithm figures out the solution. It is like coding a meta-program that comes up with the solution itself. For example, Philippe Starck created a chair using a generative algorithm, and there have been similar examples in the engineering and architecture industries. These principles can be applied to other domains as well.

Implications for investors. You had better start to understand how this works. When GPT-3 arrived, there were a lot of startups that brought AI solutions with magical capabilities to the table. Now that ChatGPT is available, everyone can give a prompt and see that some of those companies were not a bunch of AI wizards but a middle layer between mere mortals and the GPT-3 API. Yes, they format data and create an interface, but they are still a middle layer with far less added value.

Some investors are now building their own models that take advantage of these technologies. At Starwatcher we are also exploring opportunities in the field, as we have a European startup and investor database.

Implications for startups. As with every new wave of technologies, this one brings a lot of opportunities. It will disrupt knowledge industries, probably not by replacing humans altogether but rather by working as an intelligent assistant. GitHub Copilot is a perfect example. It is not an AI developer itself, but it can help developers to be more efficient.

How far are we from General AI? Well, this could take a while. Scientists who pioneered these algorithms in the last century (in other words, a long, long time ago) say that we have to look for a new direction to have a major breakthrough. If you want to learn more and go down the rabbit hole, here is a talk from the Association for the Advancement of Artificial Intelligence from 2020. Third from the top. Have fun, it is not as geeky as you could imagine ;)

Conclusion

I would like to conclude by quoting Christopher Manning:

"When humans think of an AI, people fixate on the individual human brain. But humans and human society in the 21st century aren't powerful because of an individual human brain. We are powerful because the development of language gave us the opportunity to network human brains together. We could communicate with each other, share information and work together as teams."

Information has been at the core of the development of human society. We might have reached the next milestone, where we have figured out how to access this information in a simpler manner. Is it perfect? Far from it. It will become better over time. Gartner has it in the hype cycle already.

Finally, did I use ChatGPT to prepare this article? I did! I asked it to fix grammar. Some of it came out better, some was generic and boring. It had become .... statistically mediocre. No style, no character. Just words in a sequence. I checked some facts and double checked them by good ol' googling.

Some additional materials:

- The Information: A History, A Theory, A Flood

- On Large Language Models for Understanding Human Language, Christopher Manning

- The AI Revolution: The Road to Superintelligence

- OpenAI CEO Sam Altman | AI for the Next Era

- Artificial intelligence pioneer says we need to start over

- How GPT3 works, and its limitations